published on Saturday July 13, 2019 00:07:00

I haven't been blogging for a long time and decided to write one today. As a data engineer at Agoda, one of my day to day tasks includes supporting users from various teams.

To give you some background, our team has ETL tools (import/export data from/to Hadoop), data discovery web application, and customized version of HUE with some extra features.

The problem

One of my colleagues from BI team has some issue with our tool last Thursday. She has an ETL job

(essentially a Sqoop job) to import data from Postgres to Hive table. She noticed that her target

table has some column renamed. The column name from the source was native, but the column name in

target table in Hive was _native. She also has another ETL job that select data from the target

table created by the previous ETL job which failed because column names are different.

Investigating the problem

At first glance, I think Sqoop or something must have renamed native column to _native because

it sounds like a special keyword or something. I Googled a list of reserved words in Postgres,

Hive, and Sqoop but couldn't find native as a reserved word.

Since I have cloned Sqoop source code in my local machine, I can just search some code with

$ ag "\bnative\b"and found 8 matches from the repo. Sweet! 😊

Search result looks something like this:

CHANGELOG.txt

24: * [SQOOP-1429] - Fix native characters usage for SqlServer object names

...

src/java/org/apache/sqoop/orm/ClassWriter.java

96: JAVA_RESERVED_WORDS.add("native");

src/test/com/cloudera/sqoop/orm/TestClassWriter.java

344: assertEquals("_native", ClassWriter.toJavaIdentifier("native"));I can clearly see that, as expected, Sqoop did rename the column native to _native. But why?

After reading these files from Sqoop source code:

- src/java/com/cloudera/sqoop/orm/ClassWriter.java

- src/java/org/apache/sqoop/orm/ClassWriter.java

- src/test/com/cloudera/sqoop/orm/TestClassWriter.java

as well as this blog post, I can now understand why it does that. Roughly speaking, when you use Sqoop import, it will generate ORM classes based on your data model from the source and use these classes in MapReduce job.

Some experimentation

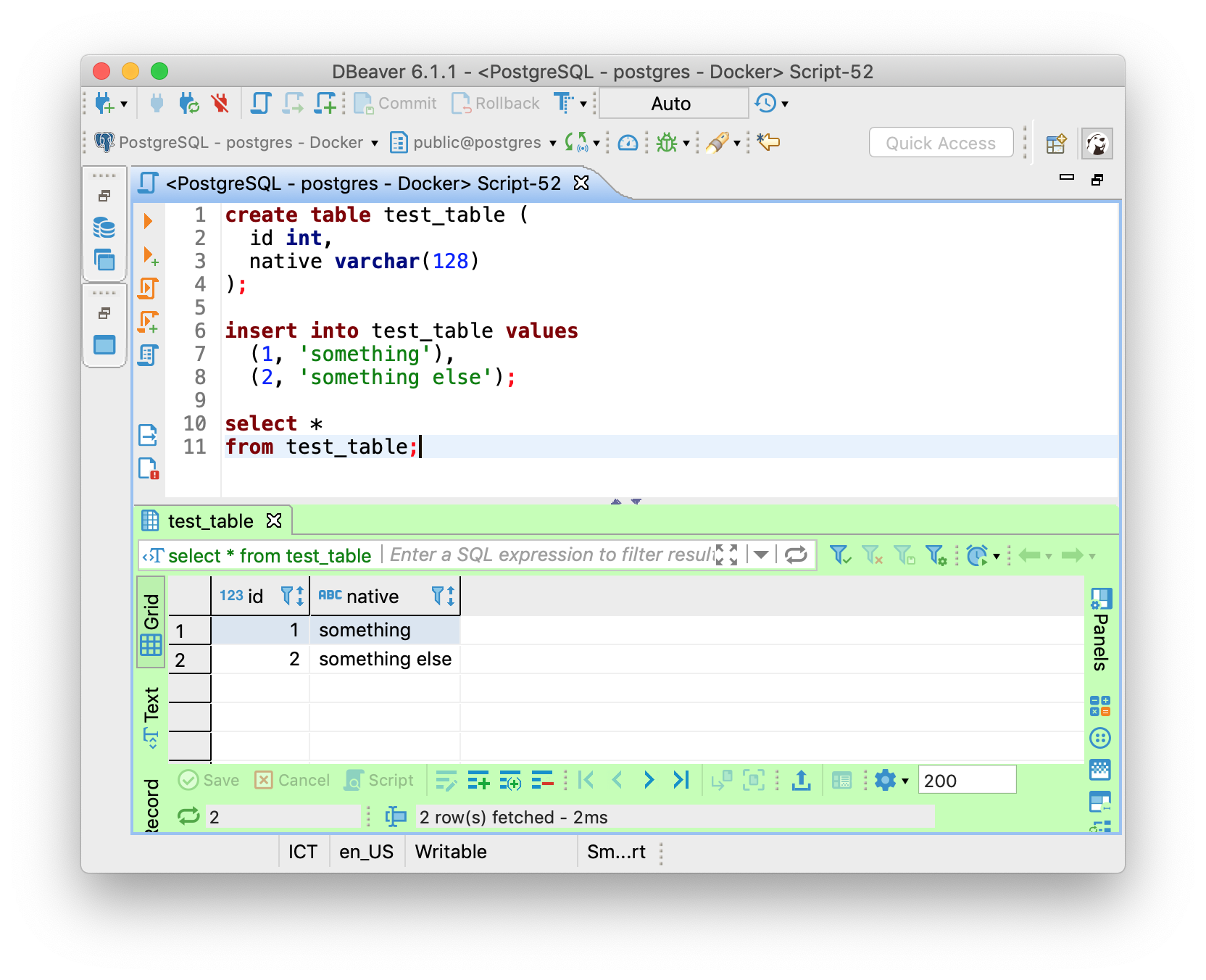

1. Set up test data on Postgres

2. Run Sqoop codegen command

$ sqoop codegen \

--driver org.postgresql.Driver \

--connect jdbc:postgresql://localhost/postgres \

--username postgres \

--table test_tableSqoop import do this step internally, but I run only codegen command because I just want to see

the generated class.

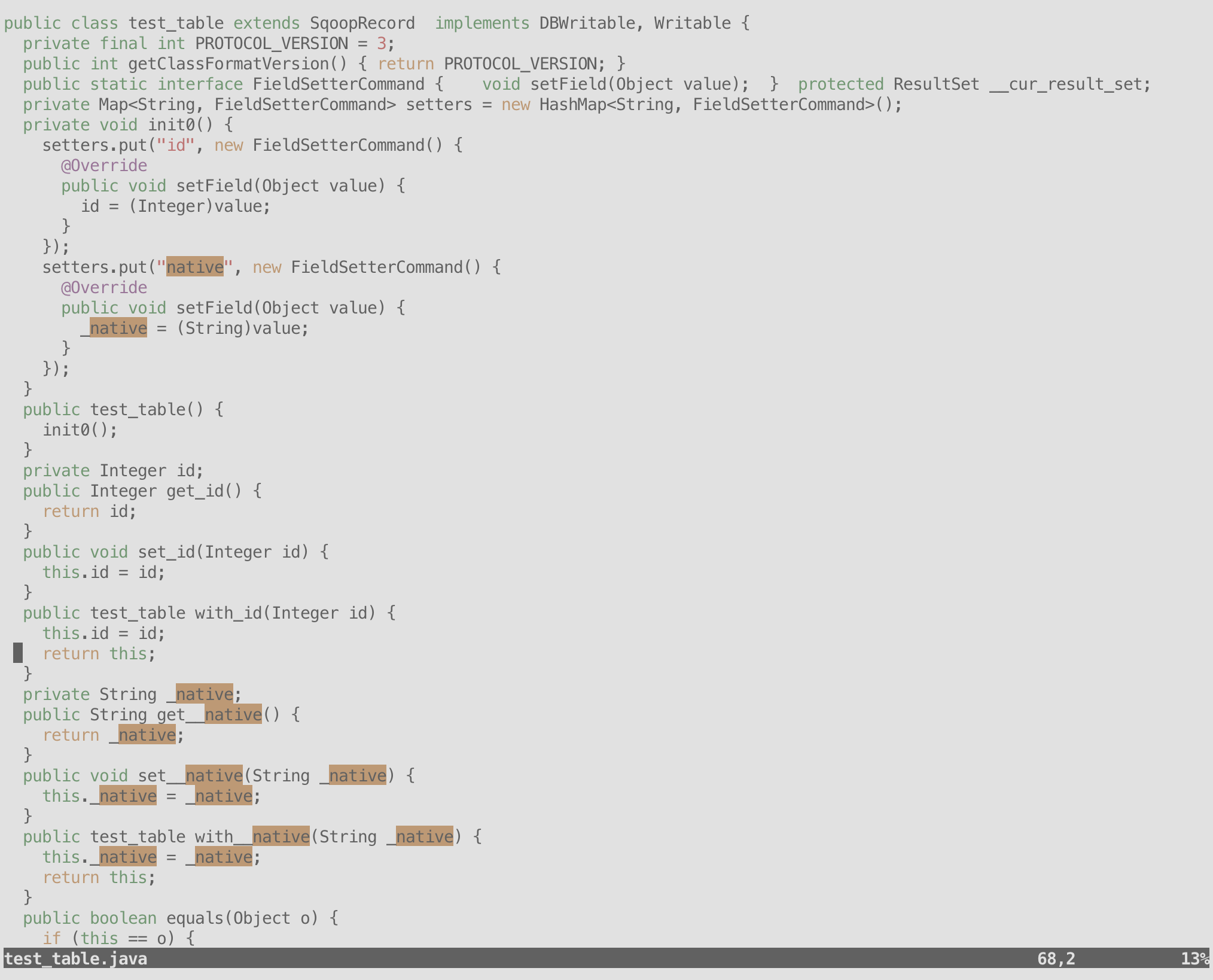

3. Generated class looks like this:

As you can see, Sqoop put _ in front of native since it's one of the Java keywords.

Conclusion

- Understanding internal of a system is crucial and always a satisfying experience.

- Sqoop has a step to generate Java ORM classes before it do the actual import in MapReduce.

- Warning log that says "this column has been renamed because ..." could be helpful.